Please note: An updated and maintained version of this content is available on GitHub as a Jupyter notebook.

Explore LAT Data

It is recommended that the user first gain some basic familiarity with the structure and content of the LAT data products, as well as the process for preparing the data by making cuts on the data file. This tutorial demonstrates various ways of examining and manipulating the LAT data, but it is a simple approach useful for quickly exploring the data.

IMPORTANT! In almost all cases, light curves and energy spectra need to be produced by a Likelihood Analysis using gtlike for robust results.

In addition to the Fermitools, you will also be using the following FITS File Viewers:

- ds9 (image viewer); download and install from: http://hea-www.harvard.edu/RD/ds9

- fv (view images and tables; can also make plots and histograms); download and install from: http://heasarc.gsfc.nasa.gov/docs/software/ftools/fv

You can download this tutorial as a Jupyter notebook and run it interactively. Please see the instructions for using the notebooks with the Fermitools.

Data Files

Some of the files used in this tutorial were prepared within the Data Preparation tutorial, and are linked here:

- 3C279_region_filtered_gti.fits (16.6 MB) - a 20 degree region around the blazar 3C 279, with appropriate selection cuts applied

- spacecraft.fits (67.6 MB) - the spacecraft data file for 3C 279

Alternatively, you can select your own region and time period of interest from the LAT data server and substitute that. Photon and spacecraft data files are all that you need for the analysis.

1. Exploring the Data

In this section we look at several different ways to explore the data and make quick plots and images of the data. First, we'll look at making quick counts maps with ds9; then we'll perform a more in-depth exploration of the data using fv.

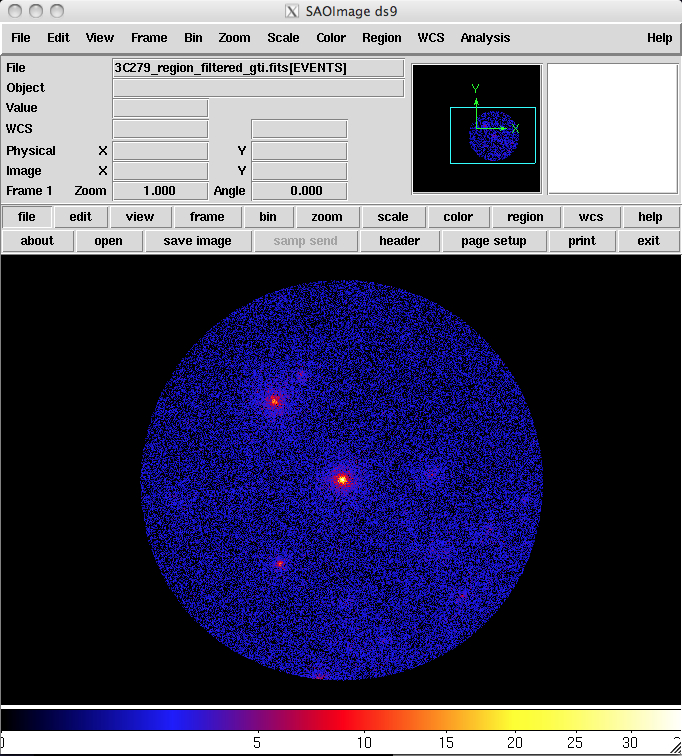

ds9 Quick look:

To see the data, use ds9 to create a quick counts map of the events in the file.

For example, to look at the 3C 279 data file, type the following command:

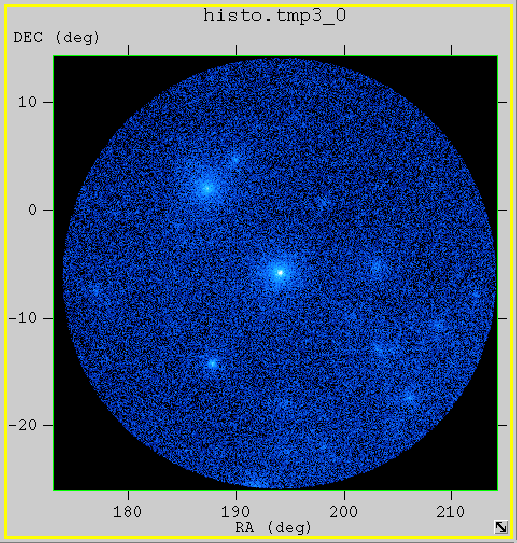

prompt> ds9 -bin factor 0.1 0.1 -cmap b -scale sqrt 3C279_region_filtered_gti.fits &

A ds9 window will open up and an image similar to the one shown below will be displayed.

Breaking the command line into its parts, we find that:

- ds9 - Invokes the command.

- -bin factor 0.1 0.1 - Tells ds9 that the x and y bin sizes are to be 0.1 units in each direction. Since we will be binning on the coordinates (RA, DEC), this means we will have 0.1 degree bins.

Note: The default factor is 1, so if you leave this off the command line you will get 1 degree bins.

- -cmap b - Tells ds9 to use the "b" color map to display the image. This is completely optional and the choice of map "b" represents the personal preference of the author. If left off, the default color map is "gray" (a grayscale color map).

- -scale sqrt - Tells ds9 to scale the colormap using the square root of the counts in the pixels. This particular scale helps to accentuate faint maxima where there is a bright source in the field as is the case here. Again this is the author's personal preference for this option. If left off, the default scale is linear.

- & - backgrounds the task, allowing continued use of the command line.
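If you launch ds9 from a script or notebook rather than from the shell, the same options can be assembled programmatically. The following is a minimal Python sketch, not part of the original tutorial; the helper name `ds9_quicklook_command` is hypothetical, and it only reproduces the options described above.

```python
import shlex

def ds9_quicklook_command(event_file, bin_factor=0.1, cmap="b", scale="sqrt"):
    """Build the ds9 argument list used in the quick-look example above."""
    return [
        "ds9",
        "-bin", "factor", str(bin_factor), str(bin_factor),  # x and y bin sizes
        "-cmap", cmap,                                       # color map
        "-scale", scale,                                     # intensity scaling
        event_file,
    ]

cmd = ds9_quicklook_command("3C279_region_filtered_gti.fits")
print(shlex.join(cmd))

# To actually open ds9 in the background (requires ds9 on your PATH):
# import subprocess
# subprocess.Popen(cmd)
```

Keeping the options in a list makes it easy to change the bin factor or color map when looking at several event files in turn.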

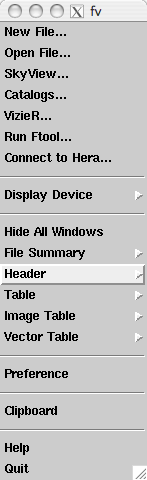

Exploring with fv:

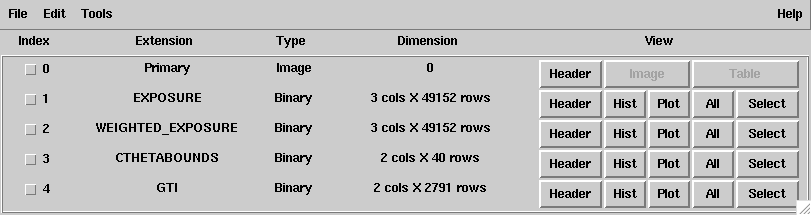

fv gives you much more interactive control of how you explore the data. It can make plots and 1D and 2D histograms; allow you to look at the data directly; and enable you to view the FITS file headers to look at some of the important keywords. Starting it up is easy: just type fv and the filename:

prompt> fv 3C279_region_filtered_gti.fits &

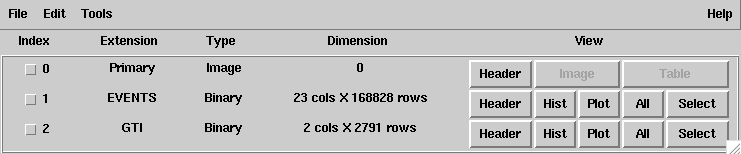

This will bring up two windows: the general fv menu window (at right); and another window shown below, which contains summary information about the file you are looking at:

For the purposes of the tutorial, we will only be using the summary window, but feel free to explore the options in the main menu window as well.

Looking at the summary window, notice that:

- There are three FITS extensions (primary, EVENTS & GTI). This is what should be there. If you don't see three, there is something wrong with your file.

- There are 168828 events in the filtered 3C279 file (the number of rows in the EVENTS extension), and 22 pieces of information (the number of columns) for each event.

- There are 2791 GTI entries.

From this window, data can be viewed in different ways:

- For each extension, the FITS header can be examined for keywords and their values.

- Histograms and plots can be made of the data in the EVENTS and GTI extensions.

- Data in the EVENTS or GTI extensions can also be viewed directly.

Let's look at each of these in turn.

Viewing an Extension Header:

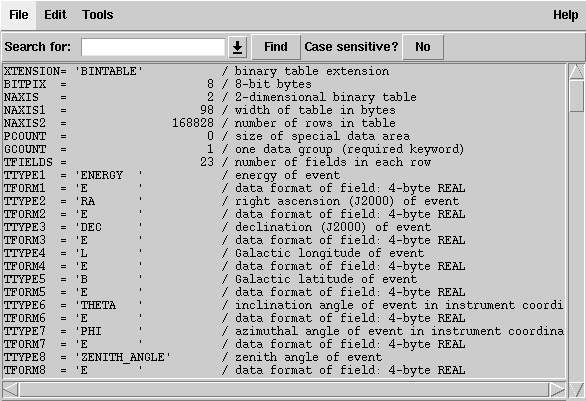

Click on the Header button for the EVENTS extension; a new window listing all the header keywords and their values for this extension will be displayed. Notice that the same information is presented that was shown in the summary window; namely that: the data is a binary table (XTENSION='BINTABLE'); there are 123857 entries (NAXIS2=123857); and there are 22 data values for each event (TFIELDS=22).

In addition, there is information about the size (in bytes) of each row and the descriptions of each of the data fields contained in the table.

As you scroll down, you will find some other useful information as shown in the screen shot below:

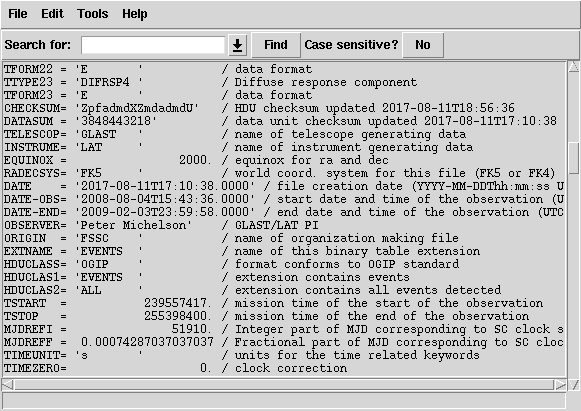

There are some fairly important keywords on this screen, including:

- DATE - The date the file was created.

- DATE-OBS - The starting time, in UTC, of the data in the file.

- DATE-END - The ending time, in UTC, of the data in the file.

- TSTART - The equivalent of DATE-OBS but in Mission Elapsed Time (MET). Note: MET is the time system used in the event file to time tag all events.

- TSTOP - The equivalent of DATE-END in MET.

- MJDREF - The Modified Julian Date (MJD) of the zero point for MET. This corresponds to midnight (UTC), Jan. 1st, 2001 for Fermi.
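Because MET is simply seconds elapsed since the zero point given above, a rough conversion to a calendar date is straightforward. The sketch below is an approximation under a stated assumption: it ignores leap seconds, so the result can differ from the true UTC by a few seconds. The function name `met_to_utc` is hypothetical, and the sample value is the MET quoted later in this tutorial as the midpoint of the 3C 279 data.

```python
from datetime import datetime, timedelta, timezone

# Zero point of Mission Elapsed Time: midnight UTC, January 1st, 2001.
MET_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)

def met_to_utc(met_seconds):
    """Approximate UTC time for a MET value (leap seconds are ignored)."""
    return MET_EPOCH + timedelta(seconds=met_seconds)

print(met_to_utc(247477908).isoformat())
```

For anything where second-level accuracy matters, use a dedicated time conversion utility instead of this shortcut.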

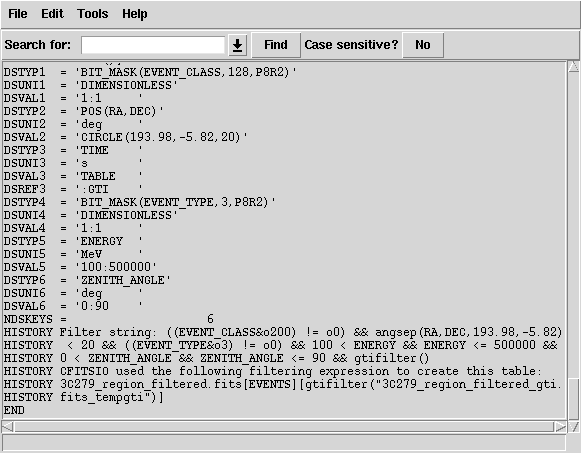

Finally, as you scroll down to the bottom of the header, you will see:

This part of the header contains information about the data cuts that were made to extract the data. These are contained in the various DSS keywords. For a full description of the meaning of these values see the DSS keyword page. In this file, the DSVAL1 keyword tells us which kind of event class has been used. The DSVAL2 keyword tells us that the data was extracted in a circular region, 20 degrees in radius, centered on RA=193.98 and DEC=-5.82. The DSVAL3 keyword shows us that the valid time range is defined by the GTIs. The DSVAL4 keyword shows the selected energy range in MeV, and DSVAL5 indicates that a zenith angle cut has been defined.
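If you want to recover the extraction region in a script, the position cut can be parsed from the keyword value. The sketch below assumes the value is stored as a string of the form `CIRCLE(ra,dec,radius)`; that format is an assumption here, so check it against your own header before relying on it. The helper name `parse_circle` is hypothetical.

```python
import re

# Assumed layout of the position cut value: CIRCLE(ra,dec,radius), in degrees.
_NUMBER = r"([-+0-9.eE]+)"
_CIRCLE = re.compile(
    r"^\s*CIRCLE\(\s*" + _NUMBER + r"\s*,\s*" + _NUMBER + r"\s*,\s*" + _NUMBER + r"\s*\)\s*$",
    re.IGNORECASE,
)

def parse_circle(dsval):
    """Return (ra, dec, radius) in degrees from a CIRCLE(...) string."""
    match = _CIRCLE.match(dsval)
    if match is None:
        raise ValueError(f"not a CIRCLE region: {dsval!r}")
    ra, dec, radius = (float(group) for group in match.groups())
    return ra, dec, radius

print(parse_circle("CIRCLE(193.98,-5.82,20)"))
```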

Making a Counts Map:

fv can also be used to make a quick counts map to see what the region you extracted looks like. To do this:

- In the summary window for the FITS file, click on the Hist button for the EVENTS extension. A Histogram window will open.

Note: We use the histogram option rather than the plot option, because the plot option would produce a scatter plot instead of a binned image.

- From the X column's drop down menu in the column name field, select RA.

fv will automatically fill in the TLMin, TLMax, Data Min and Data Max fields based on the header keywords and data values for that column. It will also make guesses for the values of the Min, Max and Bin Size fields.

- From the Y column's drop down menu in the column name field, select DEC from the list of columns.

- Select the limits on each of the coordinates in the Min and Max boxes.

In this example, we've selected the limits to be just larger than the values in the Data Min and Data Max field for each column.

- Set the bin size for each column (in the units of the respective column).

For this map, we've selected 0.1 degree bins.

- You can also select a data column from the FITS file to use as a weight if you desire.

For example, if you wanted to make an approximated flux map, you could select the ENERGY column in the Weight field and the counts would be weighted by their energy.

- Click on the "Make" button to generate the map.

This will create the plot in a new window and keep the histogram window open in case you want to make changes and create a different image. The "Make/Close" button will create the image and close the histogram window.

fv also allows you to adjust the color and scale, just as you can in ds9. However, it has a different selection of color maps.

As in ds9, the default is gray scale. The image at right was displayed with the cold color map, selected by clicking on the "Colors" menu item, then selecting: "Continuous" submenu --> "cold" check box.
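The histogram that fv builds here is conceptually simple: each event is dropped into an (RA, DEC) bin, optionally adding a weight instead of a count of one. The following Python sketch illustrates that idea with three made-up events; it is not how fv is implemented, and the function name `counts_map` and the event values are purely illustrative.

```python
import math
from collections import defaultdict

def counts_map(ra, dec, bin_size=0.1, weights=None):
    """Bin events into a sparse 2D histogram keyed by (ra_bin, dec_bin)."""
    if weights is None:
        weights = [1.0] * len(ra)  # plain counts: every event weighs one
    image = defaultdict(float)
    for r, d, w in zip(ra, dec, weights):
        key = (math.floor(r / bin_size), math.floor(d / bin_size))
        image[key] += w
    return dict(image)

# Three made-up events; the first two fall in the same 0.1 degree pixel.
ra = [193.98, 193.97, 194.52]
dec = [-5.82, -5.83, -5.31]
energy = [150.0, 900.0, 300.0]  # MeV, illustrative values only

print(counts_map(ra, dec))                  # unweighted counts map
print(counts_map(ra, dec, weights=energy))  # counts weighted by ENERGY
```

Note that this is a flat binning in RA and DEC with no sky projection, which is also why such quick-look images lack proper coordinate information.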

2. Binning the Data

While ds9 and fv can be used to make quick look plots when exploring the data, they don't automatically do all the things you would like when making data files for analysis. For this, you will need to use the Fermi-specific gtbin tool to manipulate the data.

You can use gtbin to bin photon data into the following representations:

- Images (maps)

- Light curves

- Energy spectra (PHA files)

This has two advantages: the files are created in exactly the format needed by the other science tools, as well as by other tools such as XSPEC; and the correct WCS keywords are added to the images, so that the coordinate systems are properly displayed by image viewers (such as ds9 and fv) that can interpret the WCS keywords.

In this section we will use the 3C279_region_filtered_gti.fits file to make images and will look at the results with ds9. In the Explore LAT Data (for Burst) section we will show how to use gtbin to produce a light curve.

Just as with fv and ds9, gtbin can be used to make counts maps out of the extracted data.

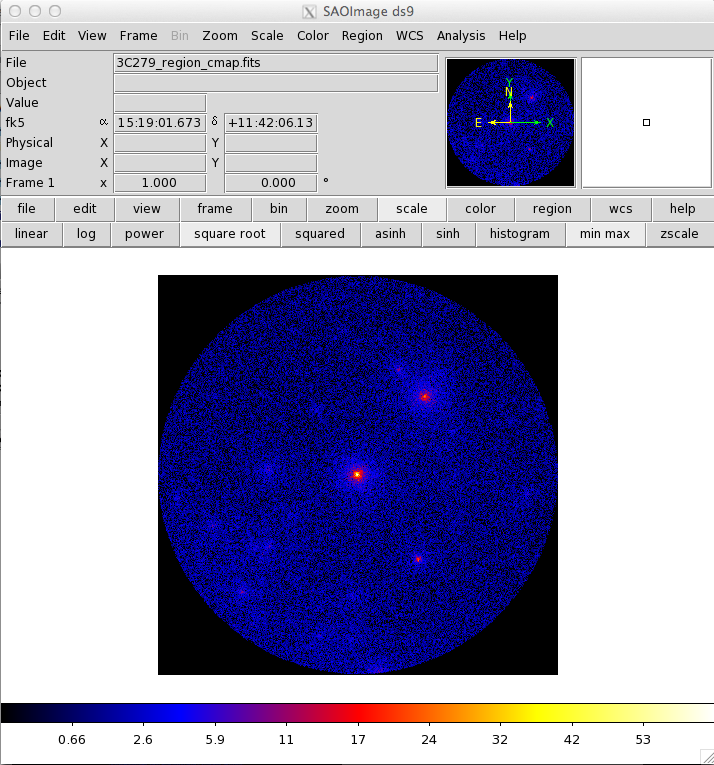

The main advantage of using gtbin is that it adds the proper header keywords, so that the coordinate system is properly displayed as you move around the image. Here we'll make the same image of the 3C 279 region that we made with fv and ds9, but this time we'll use the gtbin tool to make the image.

gtbin is invoked on the command line with or without the name of the file you want to process. If no file name is given, gtbin will prompt for it. Here is the input and output from running the task:

prompt> gtbin

Type of output file (CCUBE|CMAP|LC|PHA1|PHA2) [] CMAP

Event data file name[] 3C279_region_filtered_gti.fits

Output file name[] 3C279_region_cmap.fits

Spacecraft data file name[] NONE

Size of the X axis in pixels[] 400

Size of the Y axis in pixels[] 400

Image scale (in degrees/pixel)[] 0.1

Coordinate system (CEL - celestial, GAL -galactic) (CEL|GAL) [CEL]

First coordinate of image center in degrees (RA or galactic l)[] 193.98

Second coordinate of image center in degrees (DEC or galactic b)[] -5.82

Rotation angle of image axis, in degrees[0.]

Projection method e.g. AIT|ARC|CAR|GLS|MER|NCP|SIN|STG|TAN:[] AIT

gtbin: WARNING: No spacecraft file: EXPOSURE keyword will be set equal to ontime.

prompt>

There are many different possible projection types. For a small region the difference is small, but you should be aware of it.

When you start the tool, gtbin first asks which type of output you want.

In this case, we want a counts map so we:

- Select CMAP.

The CCUBE (counts cube) option produces a set of count maps over several energy bins.

- Provide an output file name.

- Specify NONE for the spacecraft file as it is not needed for the counts map.

- Input image size and scale in pixels and degrees/pixel.

Note: We select a 400x400 pixel image with 0.1 degree pixels in order to create an image that contains all the extracted data.

- Enter the coordinate system, either celestial (CEL) or galactic (GAL), to be used in generating the image. The coordinates for the image center (next bullet) must be in the indicated coordinate system.

- Enter the coordinates for the center of the image, which here correspond to the position of 3C 279.

- Enter the rotation angle (0).

- Enter the projection method for the image. See Calabretta & Greisen 2002, A&A, 395, 1077 for definitions of these projections.
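The image size chosen above follows directly from the extraction radius and the pixel scale: a 20 degree radius spans 40 degrees, which at 0.1 degrees/pixel needs 400 pixels per axis. The sketch below computes that and assembles a non-interactive gtbin call. The `name=value` parameter names (`algorithm`, `evfile`, `outfile`, `scfile`, `nxpix`, `nypix`, `binsz`, `coordsys`, `xref`, `yref`, `axisrot`, `proj`) are assumptions about the tool's parameter file and should be checked against the gtbin help for your Fermitools version; the helper names are hypothetical.

```python
import math

def cmap_pixels(roi_radius_deg, binsz_deg):
    """Pixels per axis needed to span the full diameter of the region."""
    # round() guards against floating-point noise before taking the ceiling.
    return math.ceil(round(2.0 * roi_radius_deg / binsz_deg, 9))

def gtbin_cmap_args(evfile, outfile, xref, yref,
                    roi_radius_deg=20.0, binsz=0.1, coordsys="CEL", proj="AIT"):
    """Assemble a gtbin counts-map command (parameter names are assumed)."""
    npix = cmap_pixels(roi_radius_deg, binsz)
    return [
        "gtbin", "algorithm=CMAP",
        f"evfile={evfile}", f"outfile={outfile}", "scfile=NONE",
        f"nxpix={npix}", f"nypix={npix}", f"binsz={binsz}",
        f"coordsys={coordsys}", f"xref={xref}", f"yref={yref}",
        "axisrot=0", f"proj={proj}",
    ]

args = gtbin_cmap_args("3C279_region_filtered_gti.fits",
                       "3C279_region_cmap.fits", 193.98, -5.82)
print(" ".join(args))
# With the Fermitools installed: import subprocess; subprocess.run(args, check=True)
```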

Here is the output counts map file to use for comparison.

Compare this result to the images made with fv and ds9 and you will notice that the image is flipped along the y-axis. This is because the coordinate system keywords have been properly added to the image header, and the Right Ascension (RA) coordinate actually increases right to left and not left to right. Moving the cursor over the image now shows the RA and Dec of the cursor position in the FK5 fields in the top left section of the display.

If you want to look at coordinates in another system, such as galactic coordinates, you can make the change by first selecting the 'WCS' button (in the first row of buttons), and then the appropriate coordinate system from the choices that appear in the second row of buttons (FK4, FK5, ICRS, Galactic or Ecliptic).

3. Looking at the Exposure

In this section, we explore ways of generating and looking at exposure maps. If you have not yet run gtmktime on the data file you are examining, this analysis will likely yield incorrect results. It is advisable to prepare your data file properly by following the Data Preparation tutorial before looking in detail at the livetime and exposure.

Generally, to look at the exposure you must:

- Make a livetime cube from the spacecraft data file using gtltcube.

- As necessary, merge multiple livetime cubes covering different time ranges.

- Create the exposure map using the gtexpmap tool.

- Examine the map using ds9.

Calculate the Livetime

In order to determine the exposure for your source, you need to understand how much time the LAT has observed any given position on the sky at any given inclination angle. gtltcube calculates this 'livetime cube' for the entire sky for the time range covered by the spacecraft file. To do this, run gtltcube on the spacecraft (pointing and livetime history) file:

prompt> gtltcube

Event data file [] 3C279_region_filtered_gti.fits

Spacecraft data file [] spacecraft.fits

Output file [] 3C279_region_ltcube.fits

Step size in cos(theta) (0.:1.) [] 0.025

Pixel size (degrees) [] 1

Working on file spacecraft.fits

.....................!

prompt>

Notes:

- Entries include:

- Name of the event data file, and the spacecraft file to use

The event file is needed to get the time range over which to generate the livetime cube.

- Output file name

- Size of the bins to use in accumulating the livetime (defaults are typically sufficient)

- Some values such as "0.1" are known to give unexpected results for "Step size in cos(theta)". Use 0.099 instead.
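To make the idea of a livetime cube more concrete, the sketch below accumulates livetime for a single sky position into bins of cos(theta), where theta is the inclination angle. This is a simplified illustration only: it uses uniform cos(theta) bins and made-up samples, covers one position rather than the whole sky, and is not the algorithm gtltcube implements. The function name `accumulate_livetime` is hypothetical.

```python
import math

def accumulate_livetime(samples, step=0.025):
    """Sum livetime into uniform cos(theta) bins between 0 and 1.

    samples: iterable of (inclination_deg, livetime_s) pairs for one position.
    """
    nbins = round(1.0 / step)
    bins = [0.0] * nbins
    for theta_deg, livetime in samples:
        cos_theta = math.cos(math.radians(theta_deg))
        if cos_theta <= 0.0:
            continue  # position is behind the instrument: nothing accumulated
        index = min(int(cos_theta / step), nbins - 1)  # cos(theta)=1 goes in the last bin
        bins[index] += livetime
    return bins

# Made-up samples: (inclination angle in degrees, livetime in seconds).
samples = [(0.0, 30.0), (30.0, 25.0), (30.0, 28.0), (45.0, 29.0), (100.0, 30.0)]
ltcube = accumulate_livetime(samples)
print(len(ltcube), sum(ltcube))
```

A step of 0.025 gives 40 inclination bins; the real tool builds such a histogram for every pixel on the sky.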

Combining multiple livetime cubes:

In some cases, you will have multiple livetime cubes covering different periods of time that you wish to combine in order to examine the exposure over the entire time range. One example would be the researcher who generates weekly flux datapoints for light curves, and has the need to analyze the source significance over a larger time period. In this case, it is much less CPU-intensive to combine previously generated livetime cubes before calculating the exposure map, than to start the livetime cube generation from scratch. To combine multiple livetime cubes into a single cube use the gtltsum tool.

Note: gtltsum is quick, but it does have a few limitations, including:

- It will only add two cubes at a time. If you have more than two cubes to add, you must add them one at a time.

- It does not allow you to append to or overwrite an existing livetime cube file.

For example, if you want to add four cubes (c1, c2, c3 and c4), you cannot add c1 and c2 to get cube_a, then add cube_a and c3 and save the result as cube_a again. You must give each result a different name.

- The calculation parameters that were used to generate the livetime cubes (step size and pixel size) must be identical between the livetime cubes.

Here is an example of adding two livetime cubes from the first and second halves of the six months of 3C 279 data (where the midpoint was 247477908 MET):

prompt> gtltsum

Livetime cube 1 or list of files[] 3C279_region_first_ltcube.fits

Livetime cube 2[none] 3C279_region_second_ltcube.fits

Output file [] 3C279_region_summed_ltcube.fits

prompt>
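When more than two cubes need to be combined under the limitations listed above, it helps to plan the sequence of pairwise sums with a distinct output name at each step. The following sketch only generates that plan; the intermediate file names and the function name `plan_ltsum` are hypothetical, and the `infile1`, `infile2` and `outfile` parameter names printed at the end are assumptions to verify against the gtltsum help.

```python
def plan_ltsum(cubes, final_name, tmp_prefix="ltsum_step"):
    """Return (infile1, infile2, outfile) steps that sum all cubes pairwise."""
    if len(cubes) < 2:
        raise ValueError("need at least two livetime cubes")
    steps = []
    running = cubes[0]
    for i, cube in enumerate(cubes[1:], start=1):
        is_last = i == len(cubes) - 1
        # Every step writes to a new file, since outputs cannot be overwritten.
        out = final_name if is_last else f"{tmp_prefix}{i}.fits"
        steps.append((running, cube, out))
        running = out
    return steps

steps = plan_ltsum(["c1.fits", "c2.fits", "c3.fits", "c4.fits"], "c_total.fits")
for infile1, infile2, outfile in steps:
    print(f"gtltsum infile1={infile1} infile2={infile2} outfile={outfile}")
```

Four cubes therefore take three runs, and the intermediate files can be deleted once the final sum exists.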

Generate an Exposure Map or Cube

Once you have a livetime cube for the entire dataset, you need to calculate the exposure for your dataset. This can be in the form of an exposure map or an exposure cube.

- Exposure maps are mono-energetic, and each plane represents the exposure at the midpoint of the energy band, not integrated over the band's energy range. Exposure maps are used for unbinned analysis methods. You will specify the number of energy bands when you run the gtexpmap tool.

- Exposure cubes are used for binned analysis methods. The binning in both position and energy must match the binning of the input data file, which will be a counts cube. When you run the gtexpcube2 tool, you must be sure the binning matches.

For simplicity, we will generate an exposure map by running the gtexpmap tool on the event file. As six months is far too much data for an unbinned analysis, this computation will take a long time. Skip past this section to find a copy of the output file.

gtexpmap allows you to control the exposure map parameters, including:

- Map center, size, and scale

- Projection type (selection includes Aitoff, Cartesian, Mercator, Tangential, etc.; default is Aitoff)

- Energy range

- Number of energy bins

The following example shows input and output for generating an exposure map for the region surrounding 3C 279.

prompt> gtexpmap

The exposure maps generated by this tool are meant

to be used for *unbinned* likelihood analysis only.

Do not use them for binned analyses.

Event data file[] 3C279_region_filtered_gti.fits

Spacecraft data file[] spacecraft.fits

Exposure hypercube file[] 3C279_region_ltcube.fits

output file name[] 3C279_exposure_map.fits

Response functions[] P8R3_SOURCE_V3

Radius of the source region (in degrees)[] 30

Number of longitude points (2:1000) [] 500

Number of latitude points (2:1000) [] 500

Number of energies (2:100) [] 30

The radius of the source region, 25, should be significantly larger

(say by 10 deg) than the ROI radius of 20

Computing the ExposureMap using 3C279_region_ltcube.fits

....................!

prompt>

You are prompted for the:

- Name of an events file to determine the energy range to use.

- Name of the livetime cube file to use (prompted as the "Exposure hypercube file").

- Name of the output file and the instrument response function to use.

For more discussion on the proper instrument response function (IRF) to use in your data analysis, see LAT IRFs Overview, as well as the current recommended data selection information from the LAT team. The LAT data caveats are also important to review before starting LAT analysis.

The next set of parameters specify the size, scale, and position of the map to generate.

- The radius of the 'source region'

The source region is different from the region of interest (ROI): the source region is the region that you will model when fitting your data. As every region of the sky that contains sources will also have adjacent regions containing sources, it is advisable to model an area larger than that covered by your dataset. Here we have increased the source region by an additional 10°, which is the minimum needed for an actual analysis. Be aware of what sources may be near your region, and model them if appropriate (especially if they are very bright in gamma rays).

- Number of longitude and latitude points

- Number of energy bins that will have maps created

This number can be small (∼5) for sources with flat spectra in the LAT regime. However, for sources like pulsars that vary in flux significantly over the LAT energy range, a larger number of energies is recommended, typically 10 per decade in energy.
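The "10 per decade" guideline can be turned into a number of energies once you know your energy range. In the sketch below, the 100 MeV to 100 GeV range is an assumed example (three decades), not a value taken from this dataset's header, and the logarithmic spacing of the grid is likewise an assumption for illustration; the function names are hypothetical.

```python
import math

def n_energies(emin_mev, emax_mev, per_decade=10):
    """Number of energies for a given range at `per_decade` points per decade."""
    decades = math.log10(emax_mev / emin_mev)
    # round() guards against floating-point noise; the tool requires at least 2.
    return max(2, math.ceil(round(per_decade * decades, 9)))

def log_grid(emin_mev, emax_mev, n):
    """n logarithmically spaced energies from emin to emax, inclusive."""
    ratio = emax_mev / emin_mev
    return [emin_mev * ratio ** (i / (n - 1)) for i in range(n)]

n = n_energies(100.0, 100000.0)  # assumed range: 100 MeV to 100 GeV
grid = log_grid(100.0, 100000.0, n)
print(n, grid[0], grid[-1])
```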

Here is the output exposure map generated in this example.

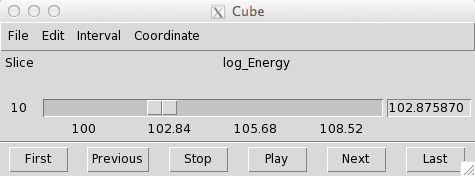

Once the file has been generated, it can be viewed with ds9. When you open the file in ds9, a "Data Cube" window will appear, allowing you to select between the various maps generated.

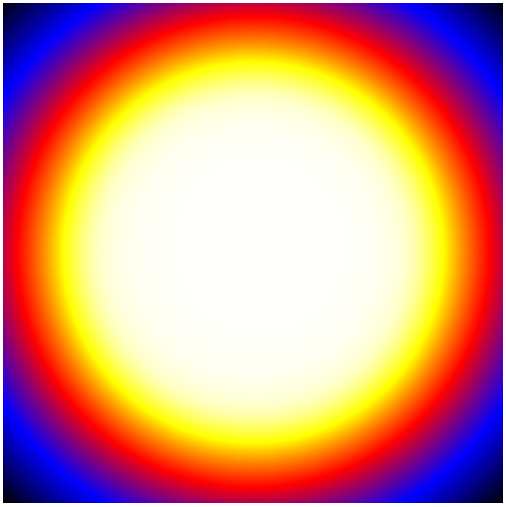

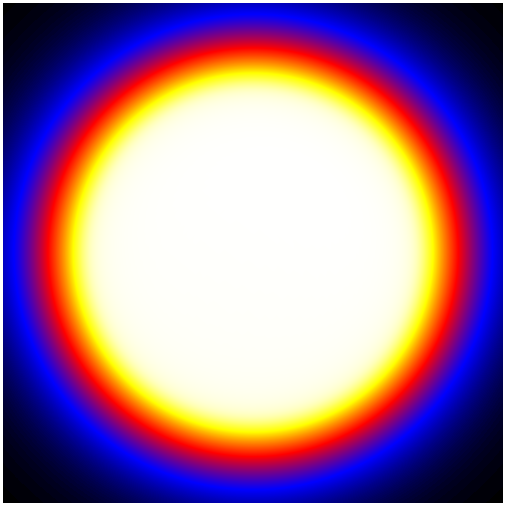

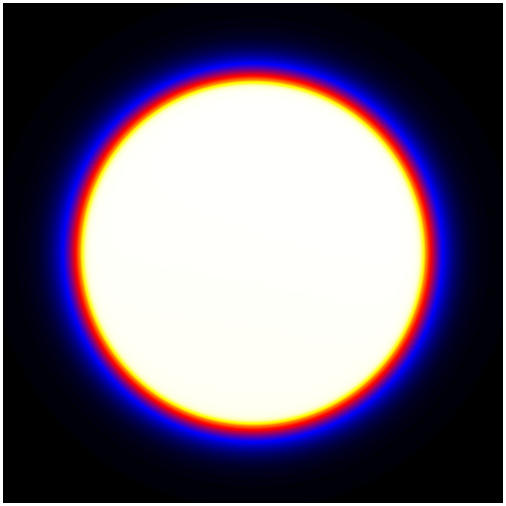

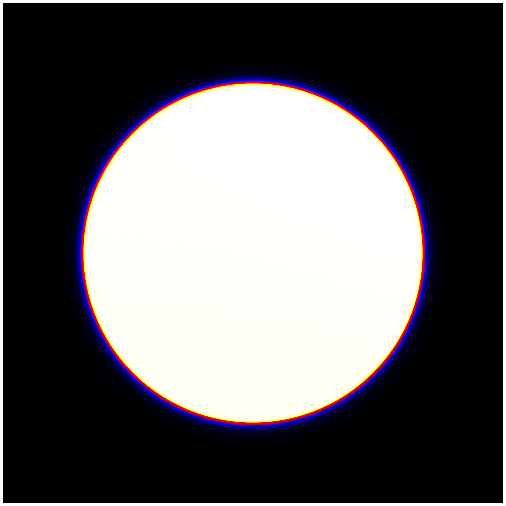

Below are four of the 30 layers in the map, scaled by log(Energy), and extracted from ds9.

| First Layer | Fourth Layer |

| Tenth Layer | Last Layer |

As you can see, the exposure changes as you go to higher energies. This is due to two effects:

- The PSF broadens at lower energies, making the wings of the exposure expand well outside the region of interest. This is why it is necessary to add at least 10 degrees to your ROI. In this case, you can see that even 10 degrees has not captured the full effect of the PSF.

- The change in the effective area of the LAT instrument as you go to higher energies.

Both of these effects are quantified on the LAT performance page.

Last updated: 10/04/2018